Finance + AI

⭐ Before we start

Financial Spreader: A tool that extracts data from scanned images, PDFs, Excel sheets, or web pages and converts it into clean, structured data such as an Excel workbook.

Issue: The company's Financial Spreader tool has stagnated at just 7 client accounts. It hasn't been able to break out into the target client accounts for the past 2 years.

Goal: To re-envision the flagship 5-year-old 'Financial Spreader', called Fura, with all the feature requests from existing client accounts, re-package it with a new data-extraction model, and launch it for the next 800+ SMEs (Subject Matter Experts) in the company.

Financial spreading is one of the most time-consuming work items in a service company. The manual transfer of data from physical documents, images, and PDFs to digital formats is error-prone, slow, and at times daunting. With the evolution of better screen-reading and relational models for understanding financial statements, the premise is: can we re-envision Fura for the next 5 years?

It is one of the biggest problems in financial services.

About $12 million comes through financial spreading alone.

Efficiency is the highest business priority at the moment.

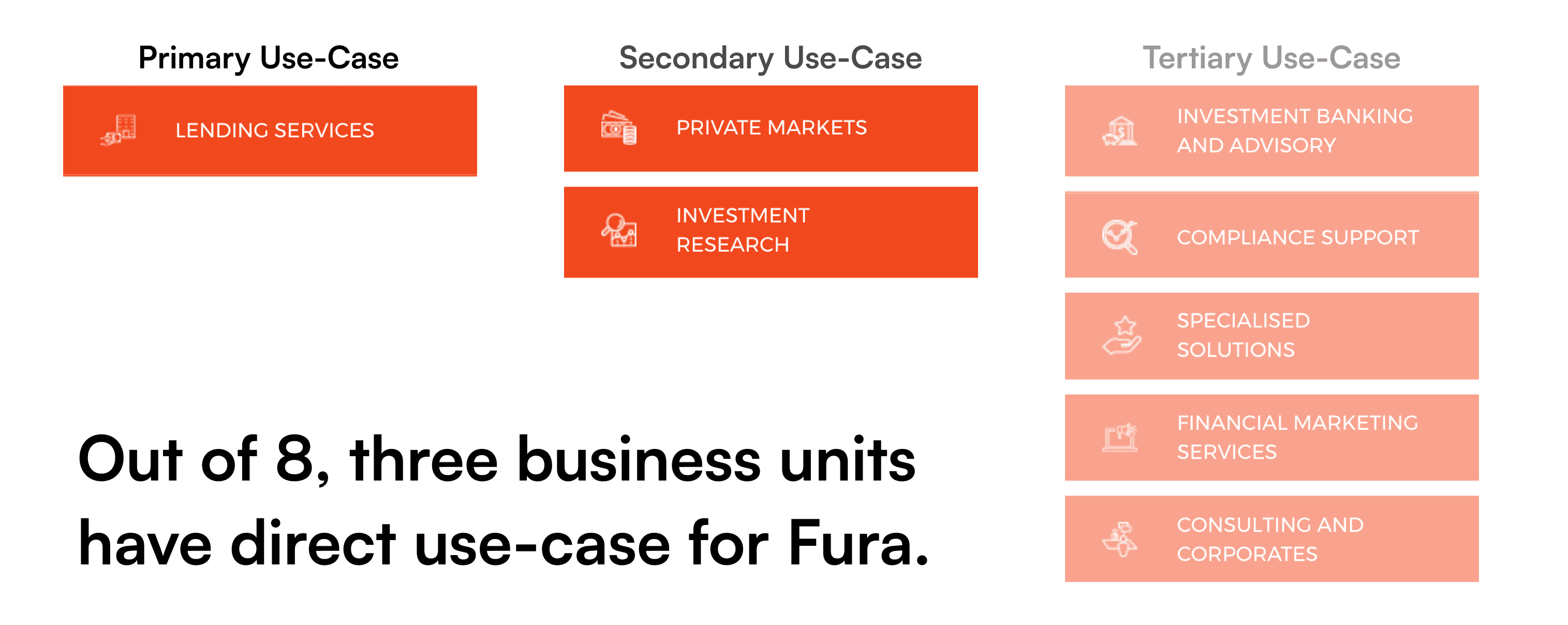

Where does this product sit in the company ecosystem?

Acuity Knowledge Partners is the world's number one Knowledge Process Outsourcing (KPO) company by revenue. With a headcount of over 6,000, Acuity acts as a quasi-captive for banking and financial institutions worldwide through its 8 service lines. Every year the company draws hundreds of contracts for high-complexity, non-automatable work, which it has continued to nail over its 20-year history.

The product belongs to the "Lending Services" Business Unit, which has 913 SMEs.

It directly impacts 37%* of the business and indirectly assists 68%*.

Almost everyone in Acuity spreads and generates documents.

*These figures are estimated from headcount and service lines.

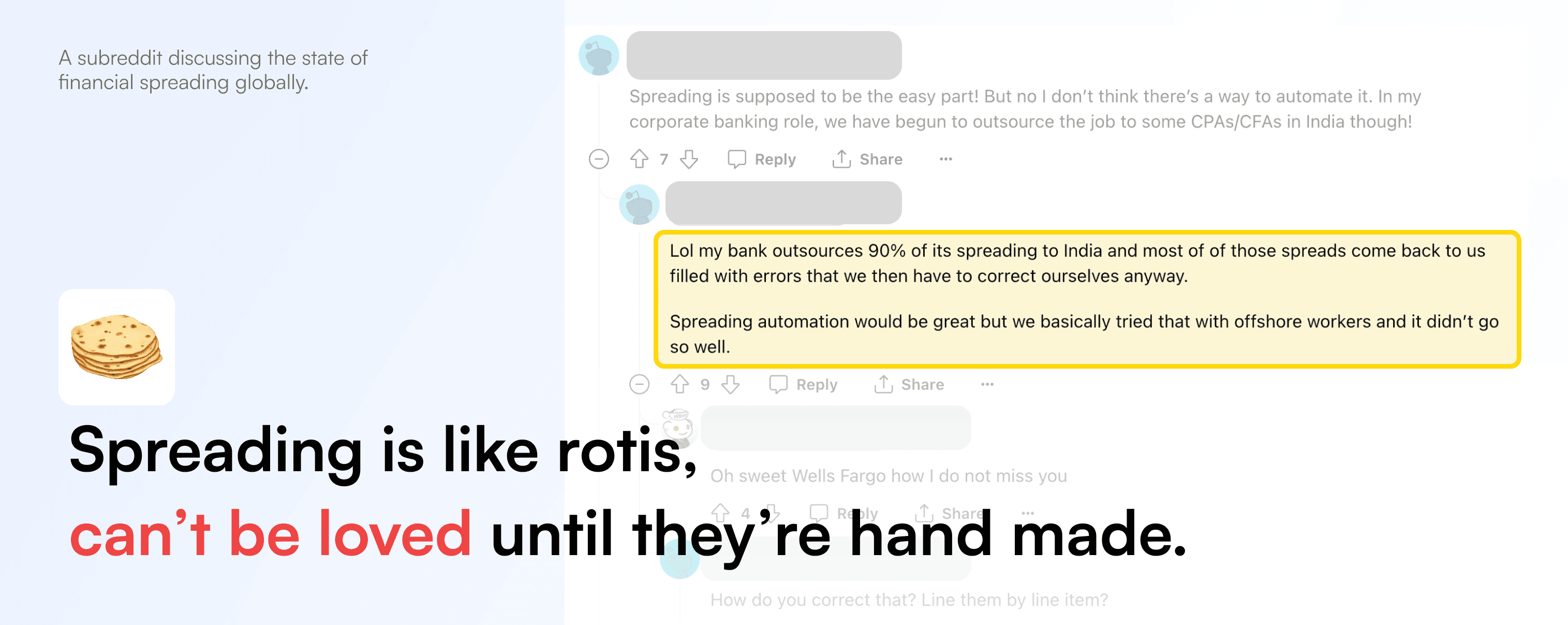

Why is Financial Spreading a pain in the a**?

With the earlier context, we understood that spreading consumes the most time across several of our service lines. With human intervention involved throughout, spreading time only becomes transparent and objective after the activity is complete. With the time taken ranging anywhere from 30 minutes to 3 hours, it's a true black-box process in which only the number of units clocked at the end is billable. Here's why this use case cannot be ignored:

Every finance professional spreads in some form or the other

It's a recurring time spend

A small value mismatch causes the entire work to be reviewed again

How did the case for a re-look come into existence?

Acuity is not a SaaS company but a managed-service company. The tools we develop are designed 100% for efficiency and service fulfilment. We may or may not go the SaaS route; that depends entirely on market conditions, which is where the defensibility aspect comes into play. The key reasons a re-look was called for:

Client growth had plateaued, and other client accounts were demanding new features.

Market conditions were evolving, creating a need for a more digitized workforce.

A more competitive product was needed to unlock tangible efficiencies for prospective clients.

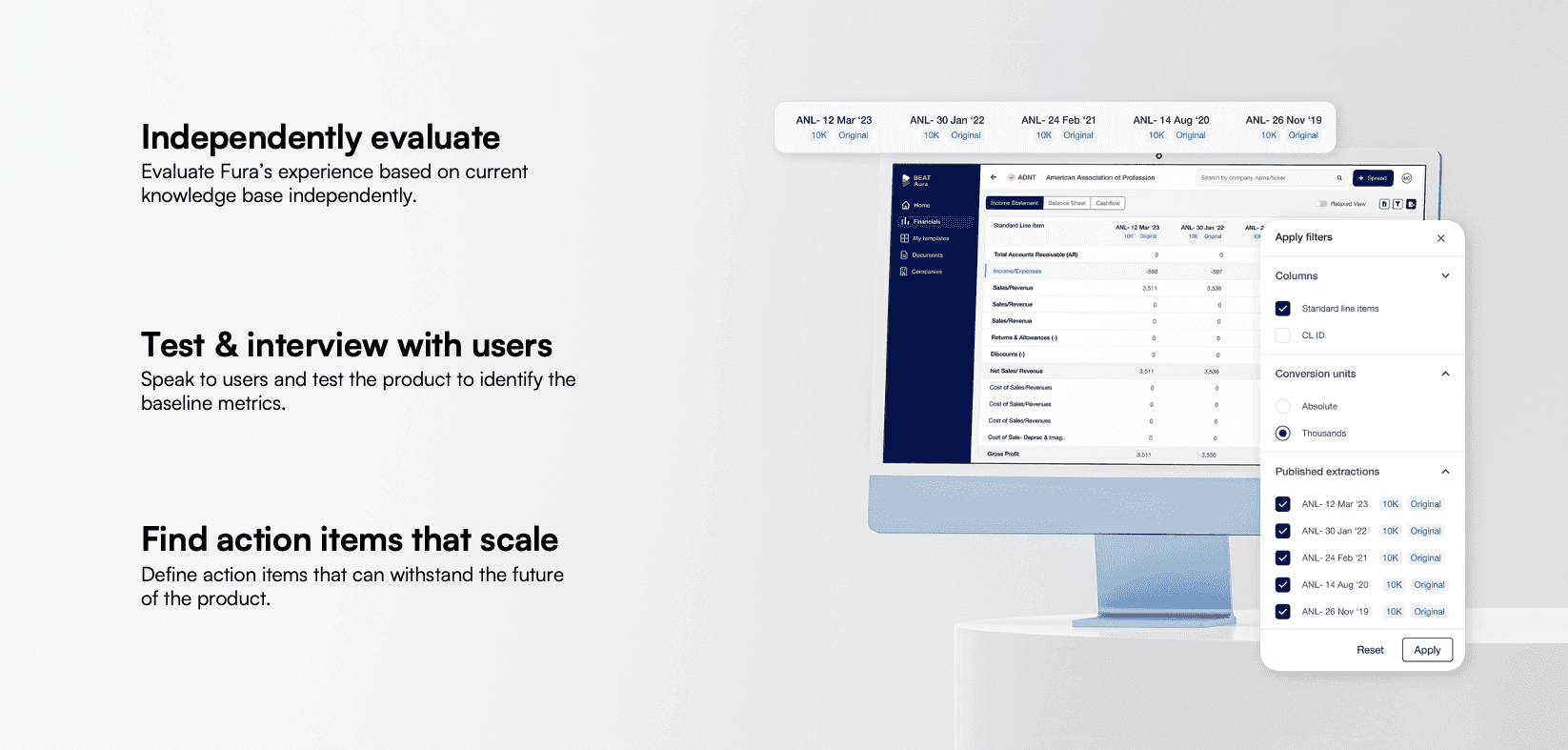

Establishing baseline user feedback on the current product

The original tool, designed in 2019, did pretty well. We started probing into what could be improved, and a good starting point was the SMEs themselves. The users were generally using the tool on and off. They said they resorted to manual methods when the results were poor.

Among the 173 users who were observed, 153 had logged in at least once in the last 30 days.

The 153 analysts who were using the product resorted to manual methods as well.

They had feature requests but no clear direction on what exactly went wrong with poor results.

The Objective

Review the primary workflow of Fura and integrate new machine learning capabilities from our updated extraction engine. Integrate more contextual notes for a more life-like spreading structure. Include a better summary. Increase SMEs' efficiency by reducing manual work in their day jobs.

High level objectives of this specific user research and redesign exercise

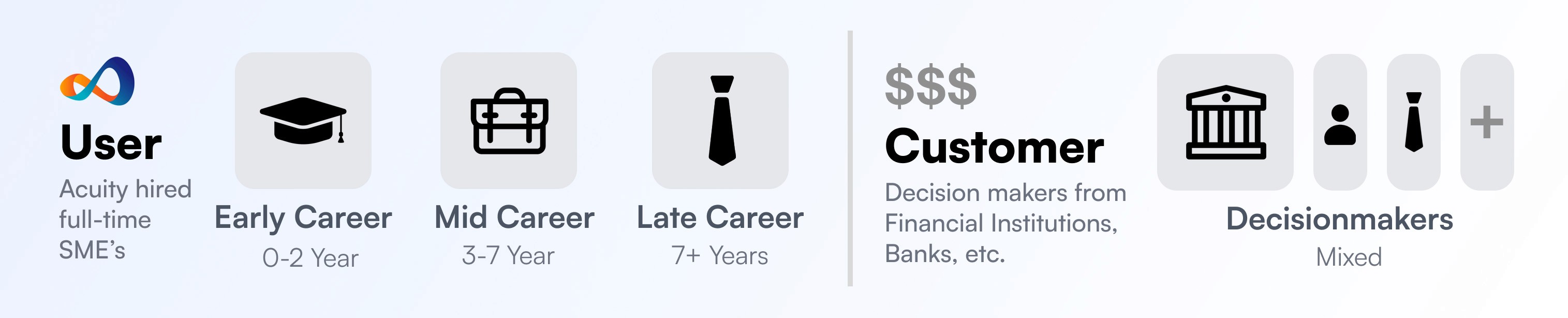

User Group

The primary user group is the Lending Services SMEs, with varying degrees of work experience and spreading requirements. They fundamentally spread manually at the moment, and some teams have spreading automations cleared by a handful of client accounts. The majority have never used any automation in their careers and mostly trust their own work over any machine's. The potential users are, however, supportive of the testing journey as long as they are credited for it and it is done on company time.

Product Components

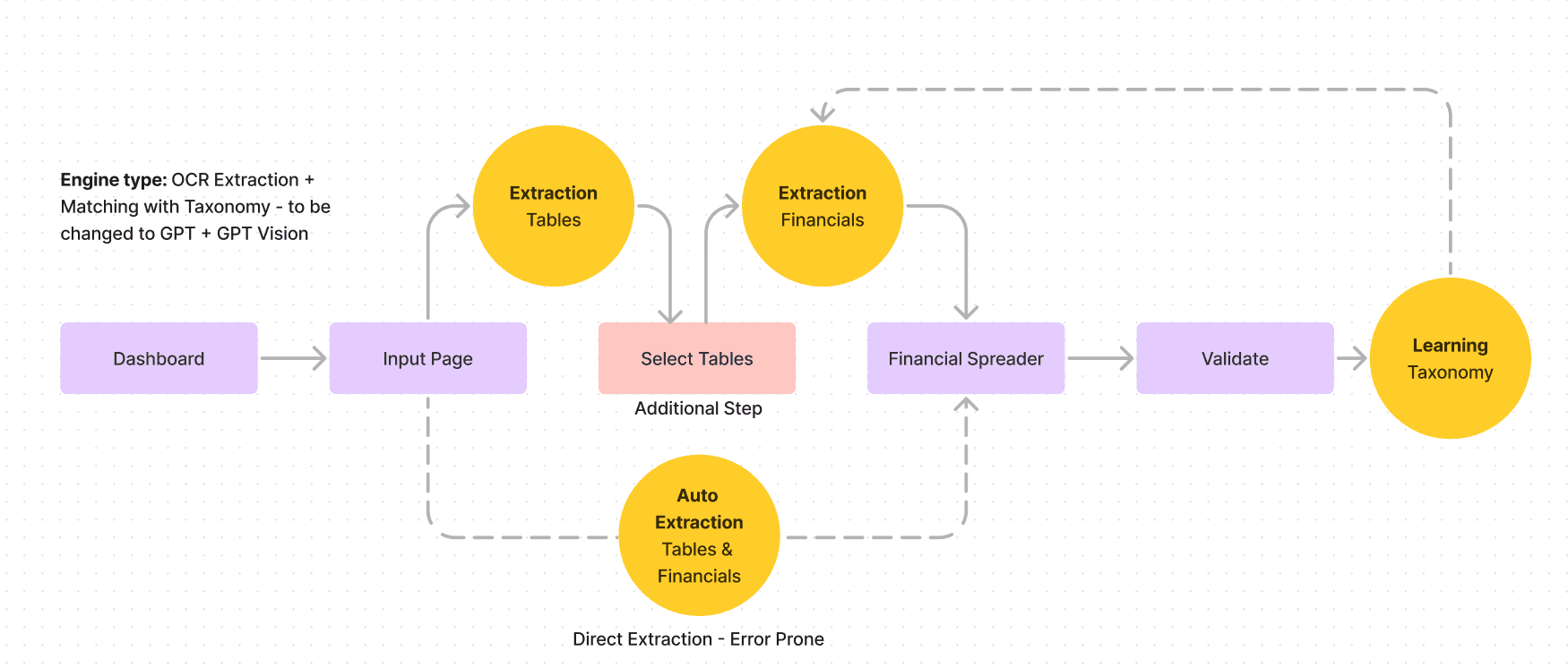

The core journey of BEAT Fura is extracting data from any kind of document. Here we focus only on the PDF journey, specifically as it applies to private companies. The idea is to explore:

What’s working / What’s not working / What are we tweaking.

Which personas does it impact?

How much retention and time savings can it potentially deliver?

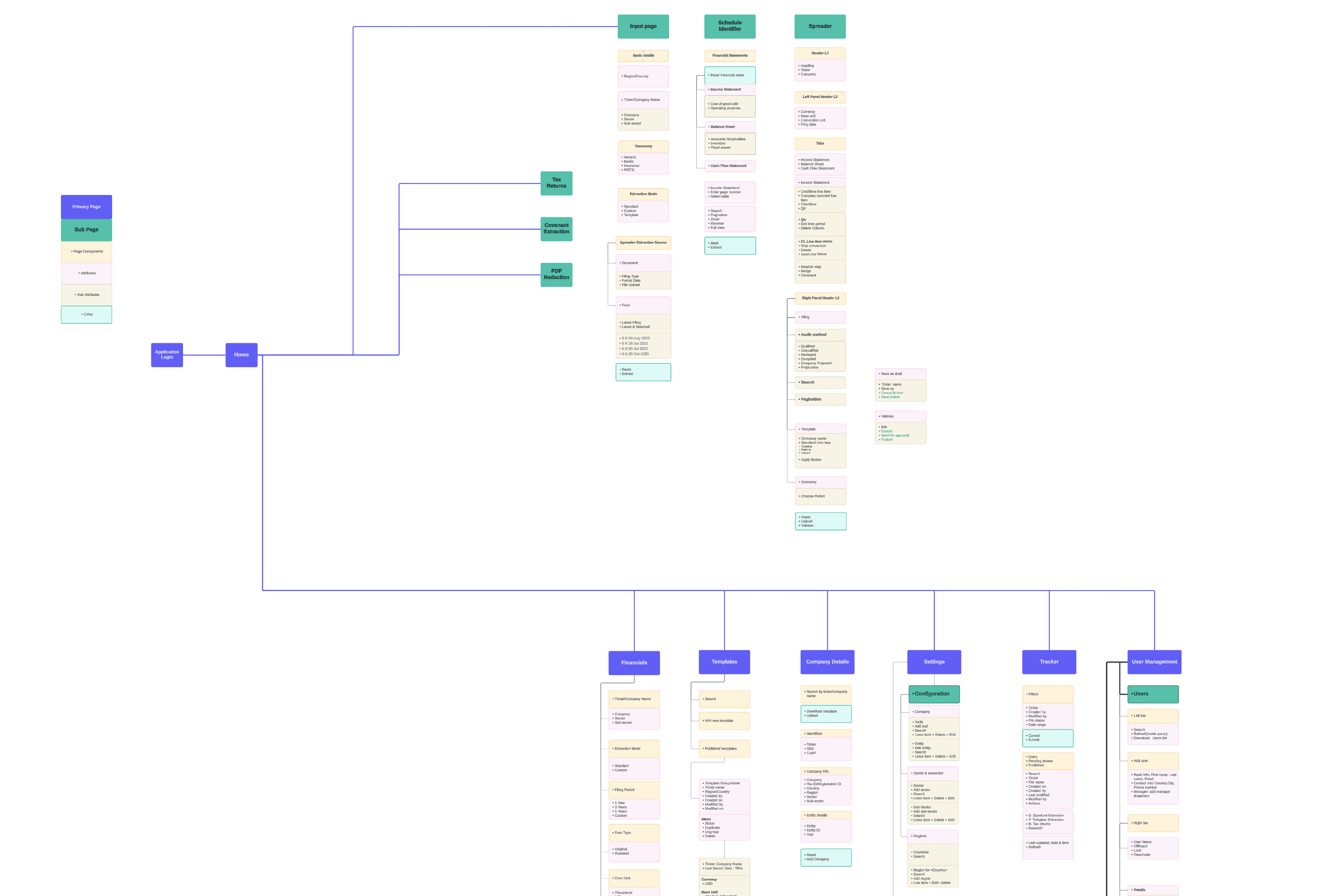

Existing Information Architecture

Primary journey of the product with engine at play

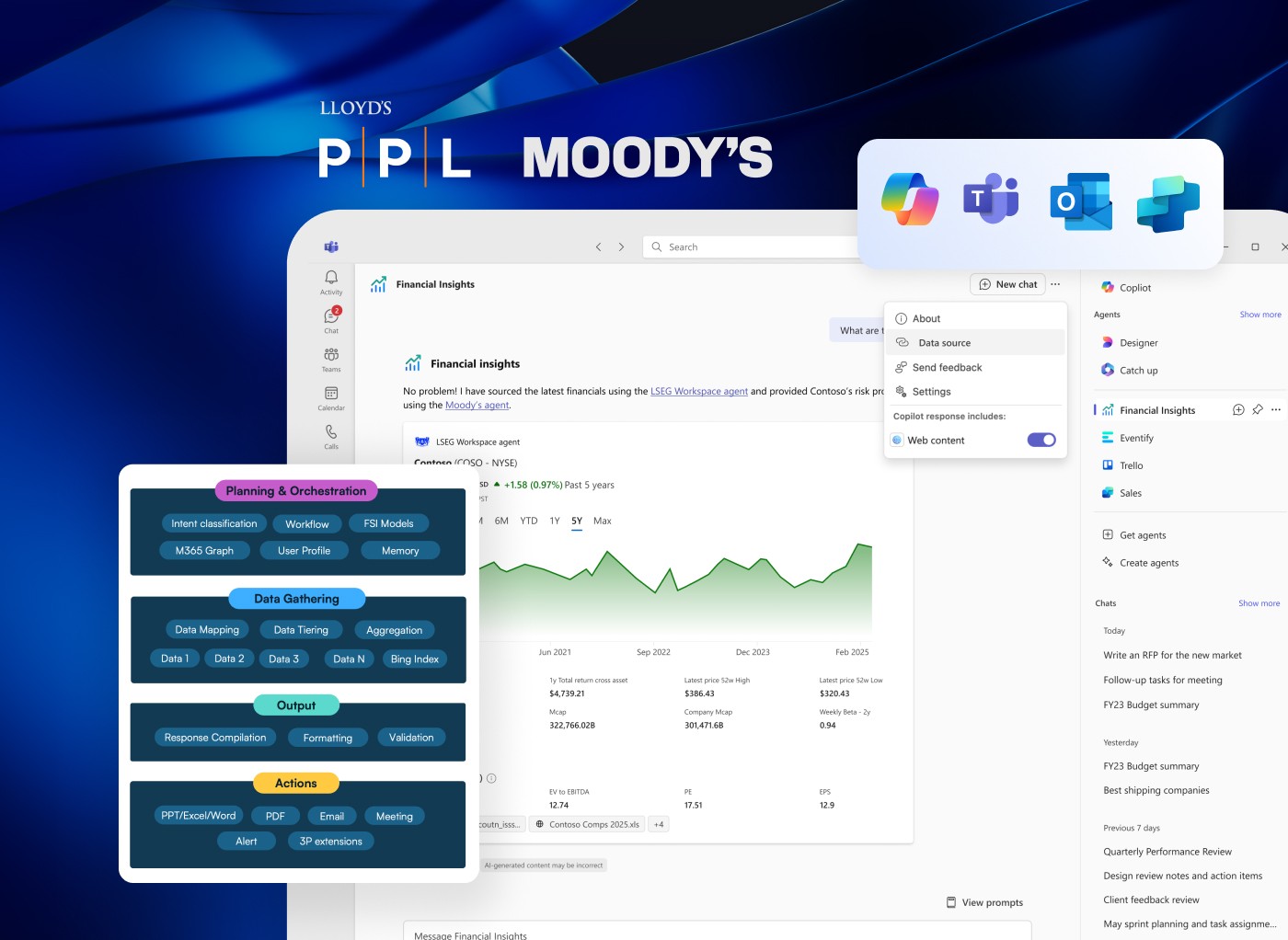

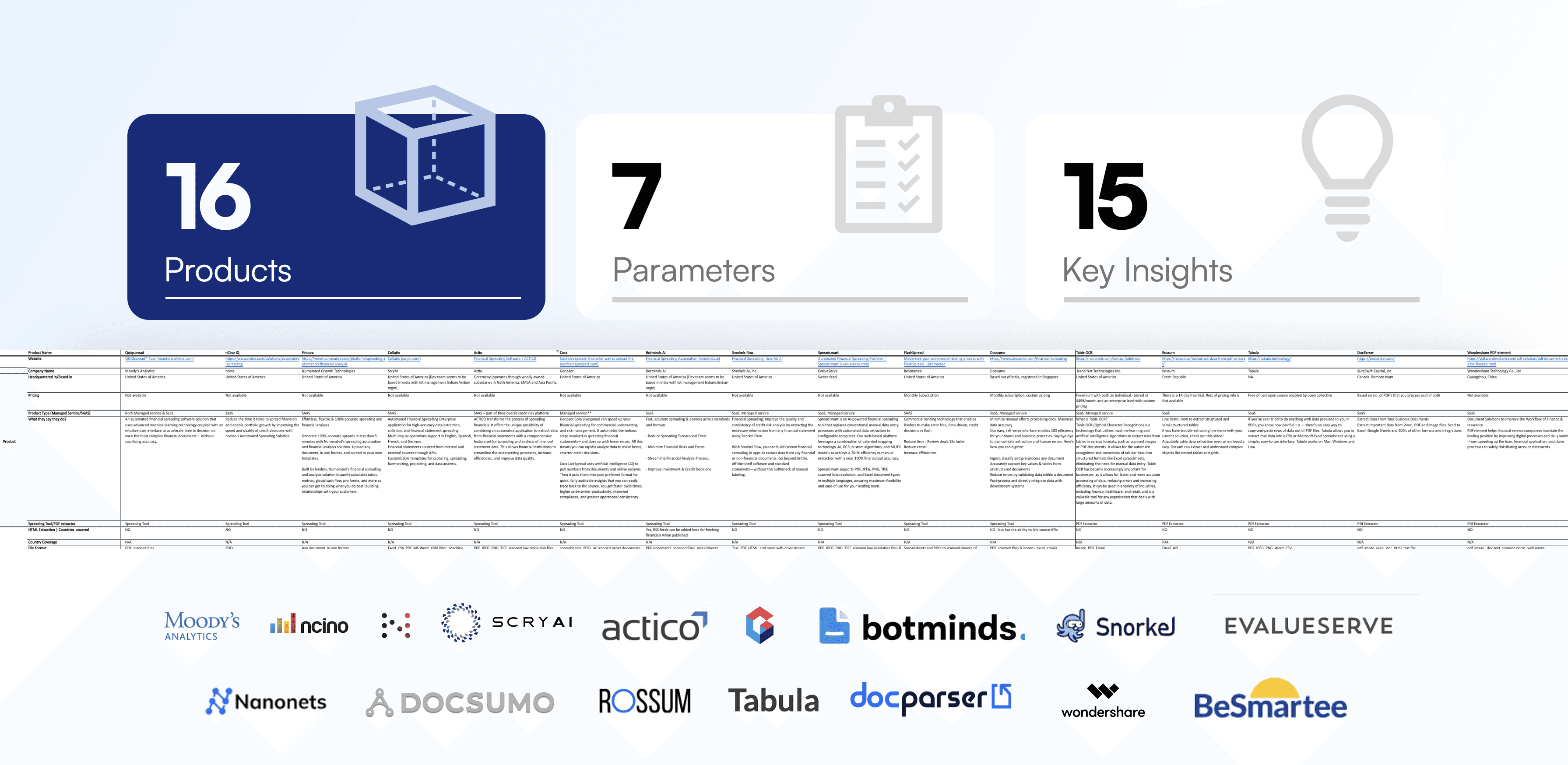

Competitor Study: Where do we stand in the market?

We looked at 16 different products across 7 parameters to understand the spreading ecosystem. While every spreading tool promises the world to its users, there is no clear winner in this space, which was quite fascinating to learn. The market is big, yet there are no big players like Google or Microsoft, which usually produce the go-to apps in spaces like this. This made us wonder whether the use cases we are chasing are actually too niche for the market, which would fit the fact that Acuity receives some of the most un-automatable tasks in the world.

Competitor Study Overview

Some of the screens to be presented to management, to show where the market is at

We also visually evaluated the strategies these products take, one after another, to learn how they solve specific problems within a similar problem statement. Most of these products are kept under wraps and not revealed until you book an official demo and subscribe to a trial, so to speed things up we opted for a different strategy altogether. We looked at B2B apps to understand what the market is like, and at B2C spreading apps to decipher which top-line features the landscape now provides. B2C products offer more liberal access to their tools, with free demos and trials available off the shelf.

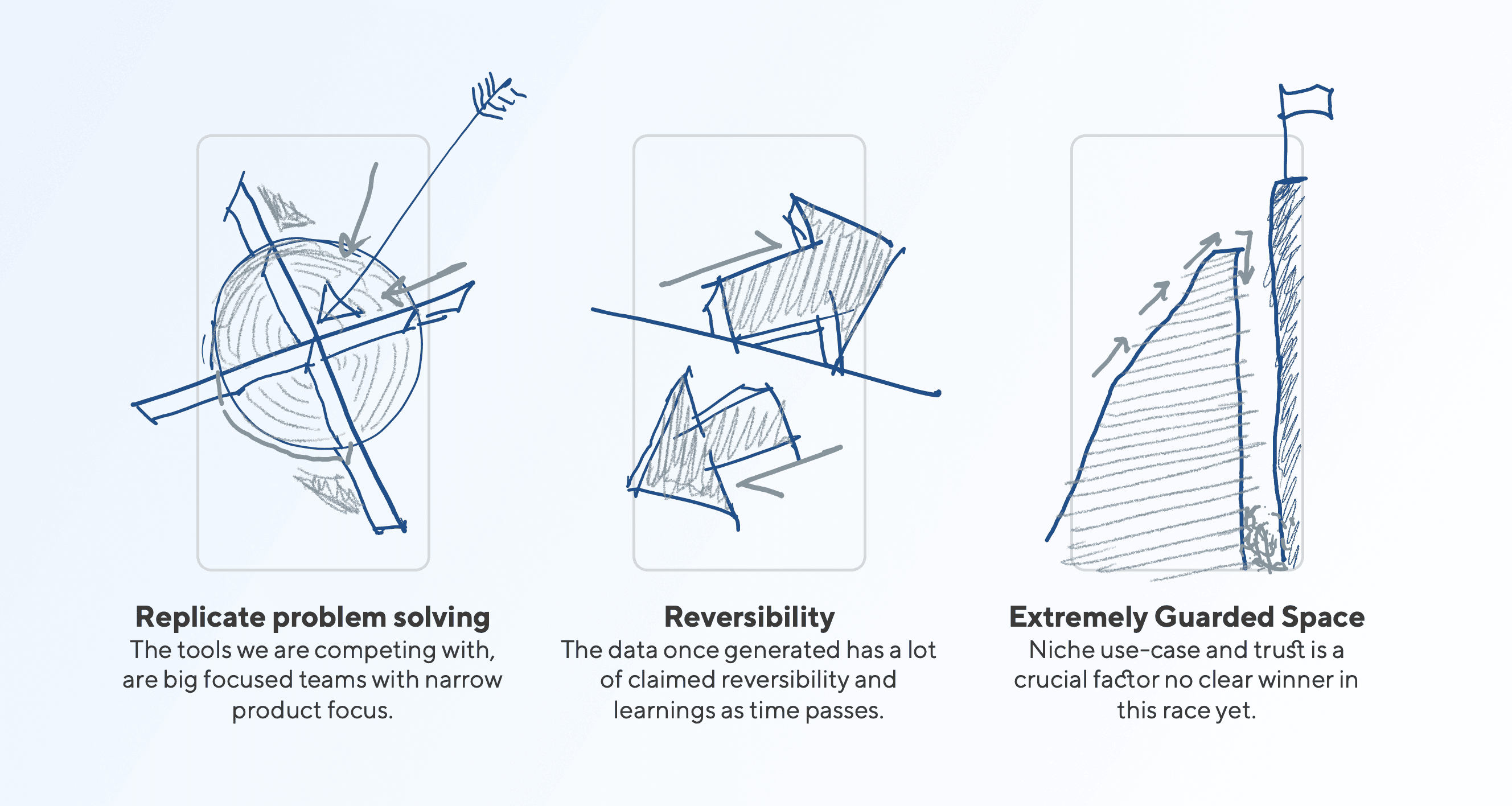

Key takeaways from the competitor study

While a few of these apps have much richer-looking feature sets, it's not ideal to chase the best-looking product in the market on features alone. When a competitor has a better solution, we replicate the problem solving rather than the tool itself. A key value the new-age tools focus on is reversibility, which Fura currently suffers on a lot. The entire product is designed as a one-way journey, putting a lot of pressure on the analyst to be right all along.

Lastly, the absence of any big players in this particular space suggests that it's one of those tasks that finance professionals across the world don't really trust machines with.

Hypothesis: The user experience and performance of the tool are too poor to generate organic adoption among the SMEs of new client accounts.

Of course, many things may be broken in the app, but this is the baseline hypothesis we open the user research with. Here's the list of modules we will check to evaluate the level of issues found in the product.

Usability tests and user interviews with 17 SMEs reveal that the product gets the job done; however, there's good room for improvement.

The usability test was conducted with 2 batches of users: A, experienced with automations, and B, fresh users who haven't used any automation. The task was to spread a publicly listed company using the spreader without using any other app. The users were given an hour to complete the task, with a 30-minute briefing on the test.

Usability Tests Overview

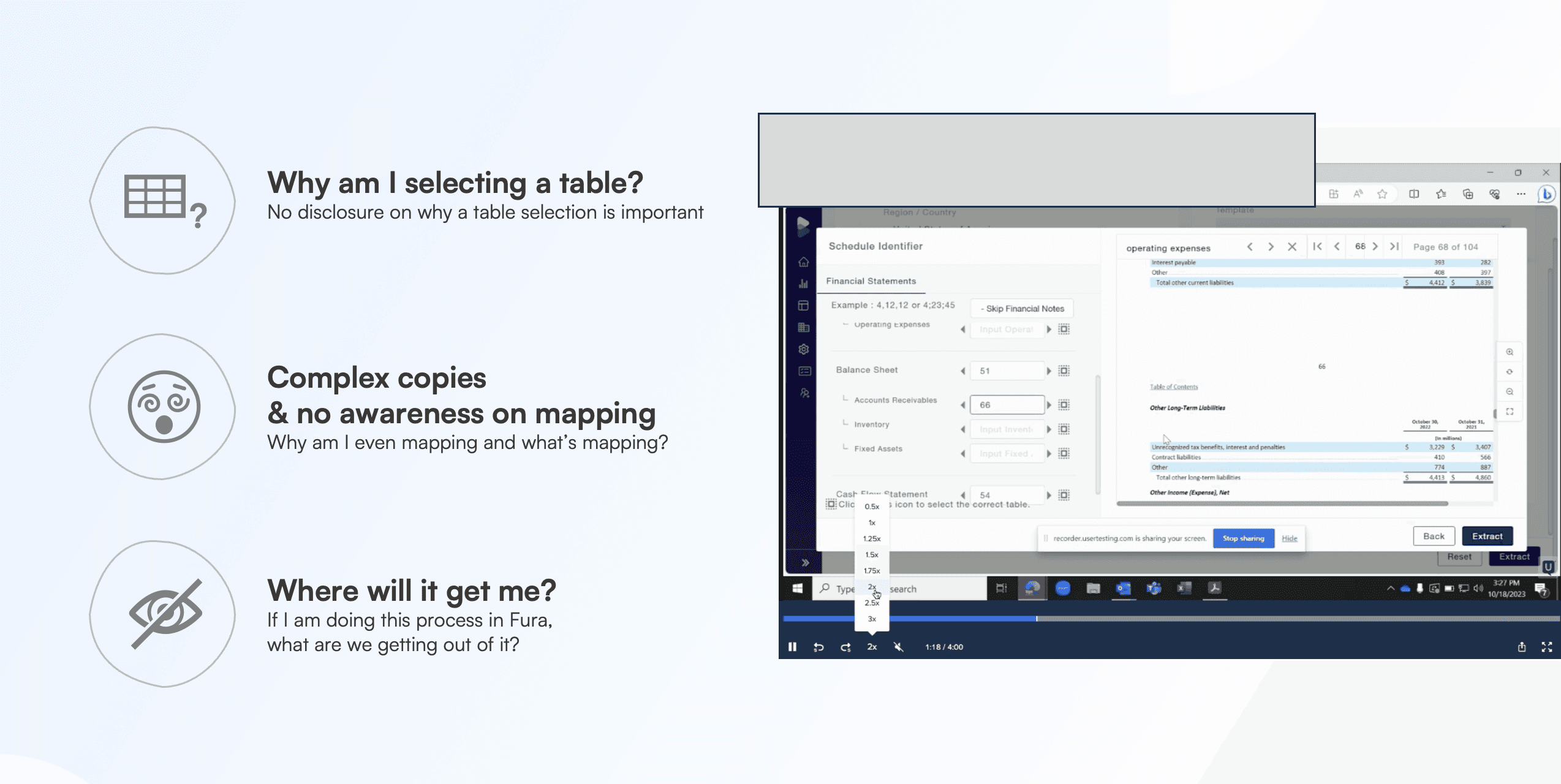

Recordings reveal mixed emotions like these.

The users don't know it's a progressively learning tool: This is only a story passed around by word of mouth, not a fact baked into the product experience.

The copy was too technical for them: When we asked users what the 'Schedule Identifier' page means, they were at times clueless because each team called a particular item by a different term.

They really don't know when to use the tool and when to manually punch in values: Fura is good at certain documents and, many a time, not at others; the tool doesn't shed light early on whether it can get the job done.

SMEs prefer the Excel UI over our UI (honestly): People in finance generally don't want to learn another tool, as they already have hundreds of SaaS products designed for them. Familiarity may get us a way back in.

There were no keyboard optimizations: A simple spreading activity took a lot of time because there was way too much switching between keyboard and mouse with a ton of repeated actions.

Tasks took more clicks and a mix of mouse and keyboard, which made workflows longer: Spreading time was generally hurt by the very limited bulk actions.

Emotional dilemma of making machines smarter: They have an impression that financial spreaders will, in a way, kill their jobs, and hence there's no natural inclination to invest in the product.

The product still hosts deprioritized or non-value-driving features: Several features still present in the product are not operational or are redundant, but have not been removed because doing so breaks the screens.

Getting lost during table selection and skipping through the checkboxes: Table selection improves accuracy by miles, and yet it's one of the most tucked-away features and very hard to find.

and many more…

User Interviews with 10 Commercial Lending SMEs reveal these areas for improvement

Manual spreading is still the No. 1 choice: Fura is not the go-to tool at present because of the unreliable results it offers without any disclosure.

Non-operational elements: A good number of features, like formulas and PDF redactions, have close to zero usage, and users don't know when to use them effectively.

Steps that added burden to the user journey: While the product was built iteratively, its earlier features were barely revisited.

Financial notes and key spreads: Merging financial notes into the spread was an important requirement.

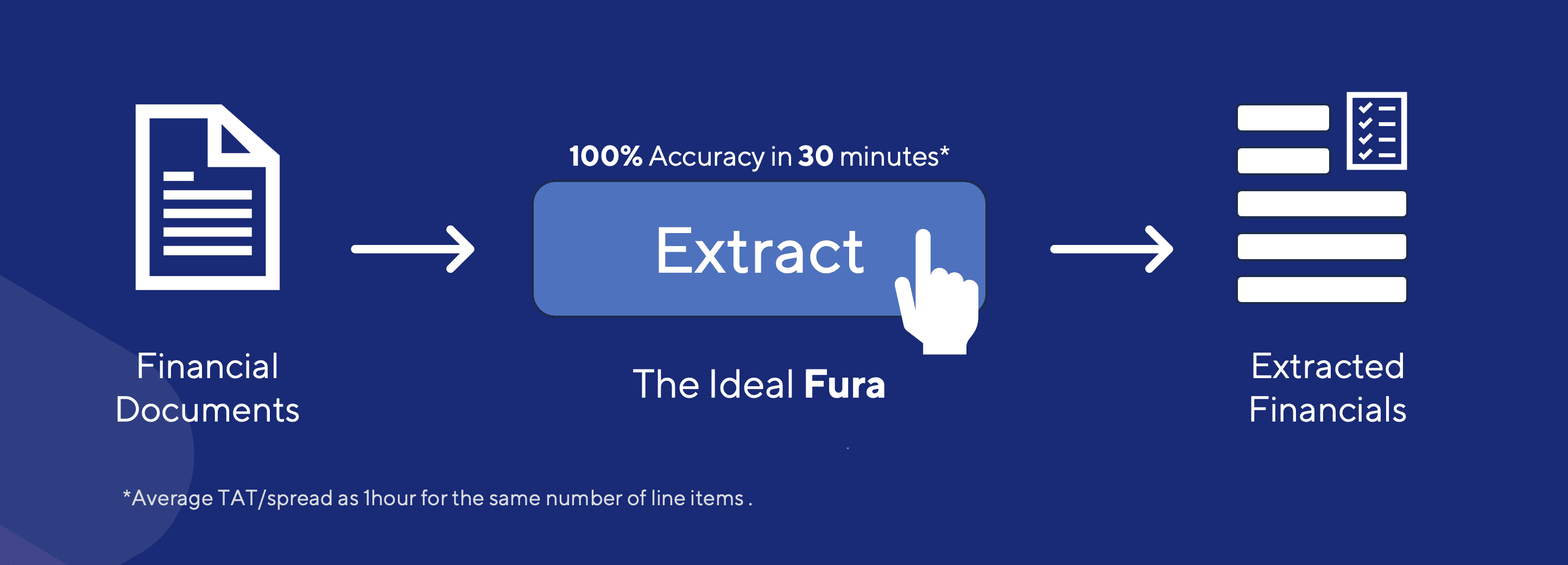

Revelation #1: The biggest issue was not the user experience. It's the expectation mismatch

Realization that the actual product footprint should be as small as a button

While concluding the user research, we realized that burdening SMEs with testing the product while expecting new results might be the wrong way of going about the product strategy. It is a given that the product has to be polished before it is released to more client accounts. However, these 3 are the key apprehensions behind why the product leaves a neutral impression and is at times seen as a hindrance to their day jobs.

Delivery SMEs get a fixed number of spreads boxed into their available time.

If they try Fura extraction they don’t get the right results / it takes more time.

Even if it does take more time, there's no promise the next extraction would be better.

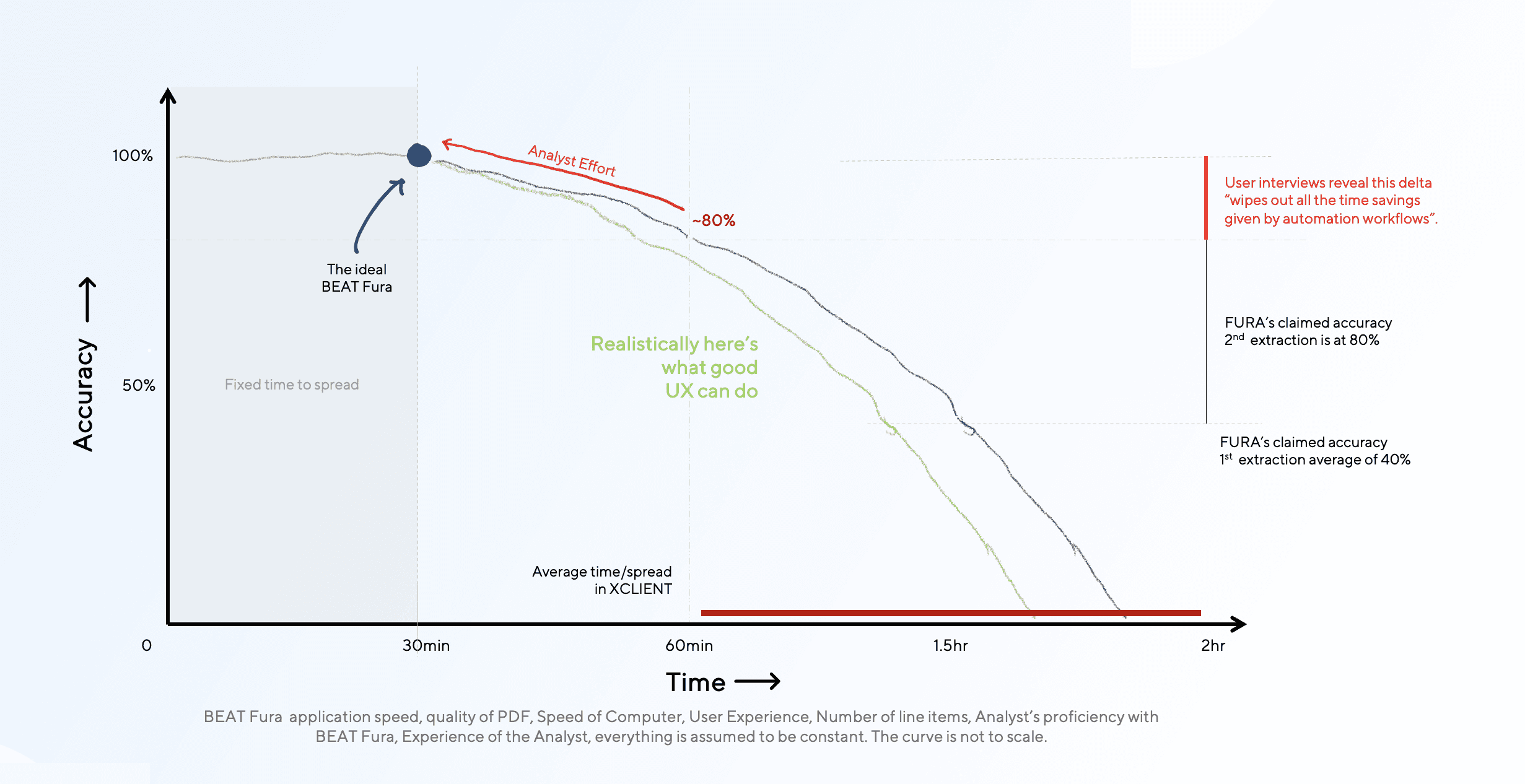

Revelation #2: Accuracy is the star of the show, UX is not

As discussed earlier, even with the world's best UX, what matters most is that the tool is smart enough to figure out what the document actually contains with as little human interaction as possible. The antithesis: if accuracy fails, the user experience goes for a complete toss, and the task takes more time than doing it manually.

This feedback was gathered from one of the user interviews and the interviewee's observations on spreading. We have a few high-level numbers on where a spreading activity takes time, at least in the case of Fura. We plotted these on a concept graph to communicate that the UX redesign is possibly a curve wiggler, but accuracy is the curve definer.
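The "curve wiggler versus curve definer" idea can be illustrated with a toy time model. This is only a sketch under stated assumptions, not the concept graph itself: the function name, the per-item review and fix times, and the accuracy levels are all made-up values chosen for illustration. The model assumes every line item is reviewed once and every wrongly extracted item is also corrected by hand.

```python
def spread_minutes(line_items: int, accuracy: float,
                   review_seconds: float, fix_seconds: float) -> float:
    """Estimated minutes for one spread under a toy model (hypothetical).

    Every line item is reviewed once; items the engine got wrong
    are additionally corrected by hand.
    """
    if not 0.0 <= accuracy <= 1.0:
        raise ValueError("accuracy must be between 0 and 1")
    wrong_items = line_items * (1.0 - accuracy)
    total_seconds = line_items * review_seconds + wrong_items * fix_seconds
    return total_seconds / 60.0


if __name__ == "__main__":
    # All numbers below are illustrative assumptions, not measured Fura data.
    baseline = spread_minutes(200, accuracy=0.6, review_seconds=5, fix_seconds=40)
    better_ux = spread_minutes(200, accuracy=0.6, review_seconds=3, fix_seconds=30)
    better_accuracy = spread_minutes(200, accuracy=0.9, review_seconds=5, fix_seconds=40)
    print(f"baseline:        {baseline:.1f} min")
    print(f"better UX:       {better_ux:.1f} min")
    print(f"better accuracy: {better_accuracy:.1f} min")
```

With these particular inputs, the accuracy gain cuts more time than the faster interactions do. The outcome depends entirely on the assumed parameters, so the sketch is a way to reason about the trade-off rather than evidence for it.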

Peer Reviews & Final Presentation

Findings this fundamental can sometimes come across as borderline delusional and go beyond what the company can comprehend from a product perspective. Hence, we held about 7 peer reviews with senior management in the company. A few of these were with business and a few with product, to ensure the vision is reasonable and rightly placed within the company.

The final presentation was made to an audience of 43 people in the Lending Services and Investment Research, which included people from the usability tests and user interviews.

Duration: 60 minutes

Content:

Establishing why this overhaul was needed.

Where the product actually fails while scaling to other accounts.

Being realistic on what a UX overhaul can do in this kind of a product.

Outcome: A tough pill to swallow, but a necessary one.

An audit presentation is at times an admission of the things that are wrong with a product. The first response from business was to ask why the mistakes happened in the first place, which is a fairly obvious question. Business was pacified by the fact that products like this are a discovery overall and will need some shedding once in a while, with another audit to follow a few years down the line.

However, in the stalemate between product quality and users' tolerance, we took the higher road of measuring accuracy and progress: tangible product-improvement metrics delivered right through the app were sought, and the redesign was flagged off.

Financials - Historic data of all the previously spread companies (Before & After)

Stages of arriving at the intermediate iteration of the table selector

Specific use cases where the product evolved

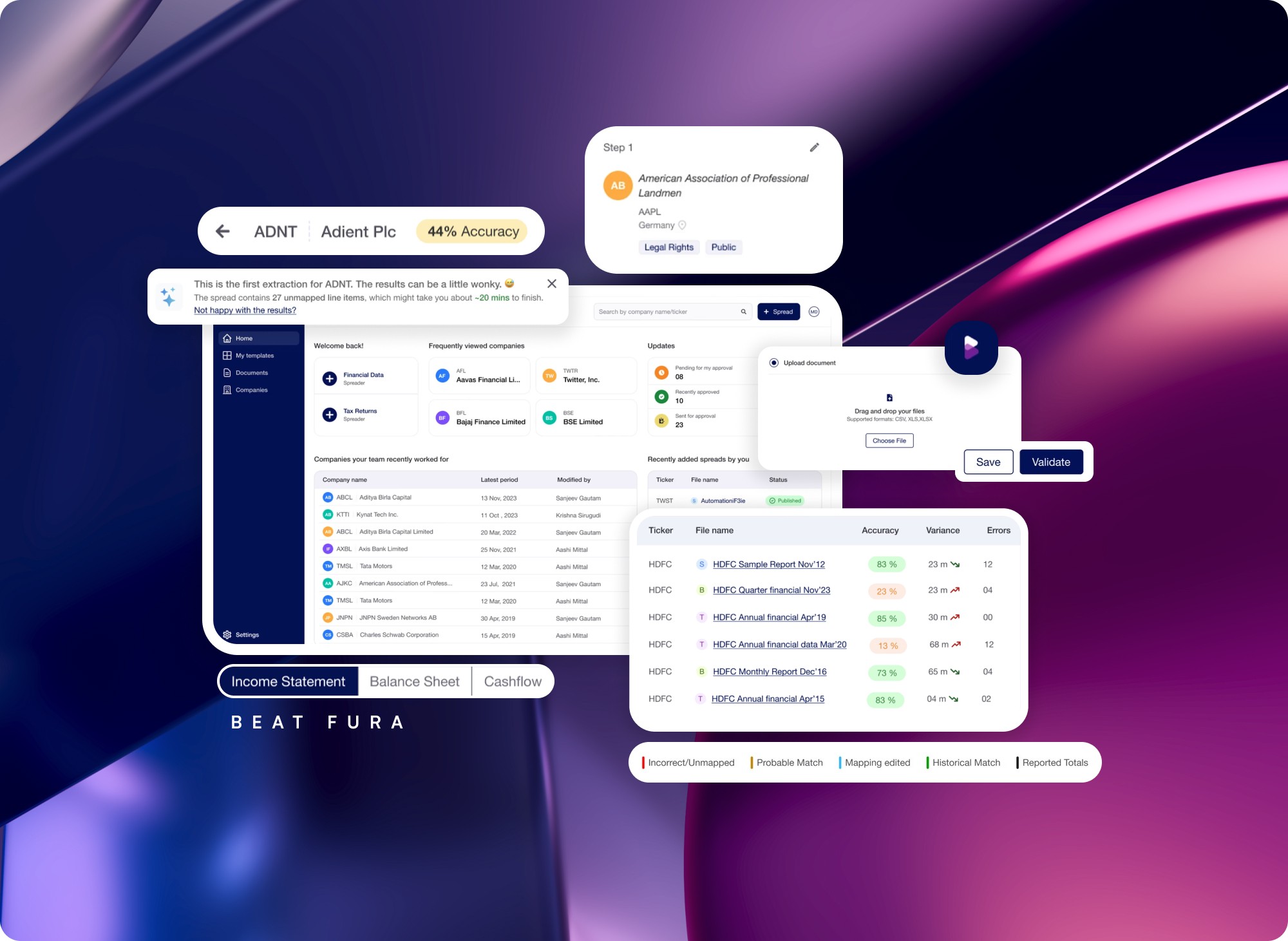

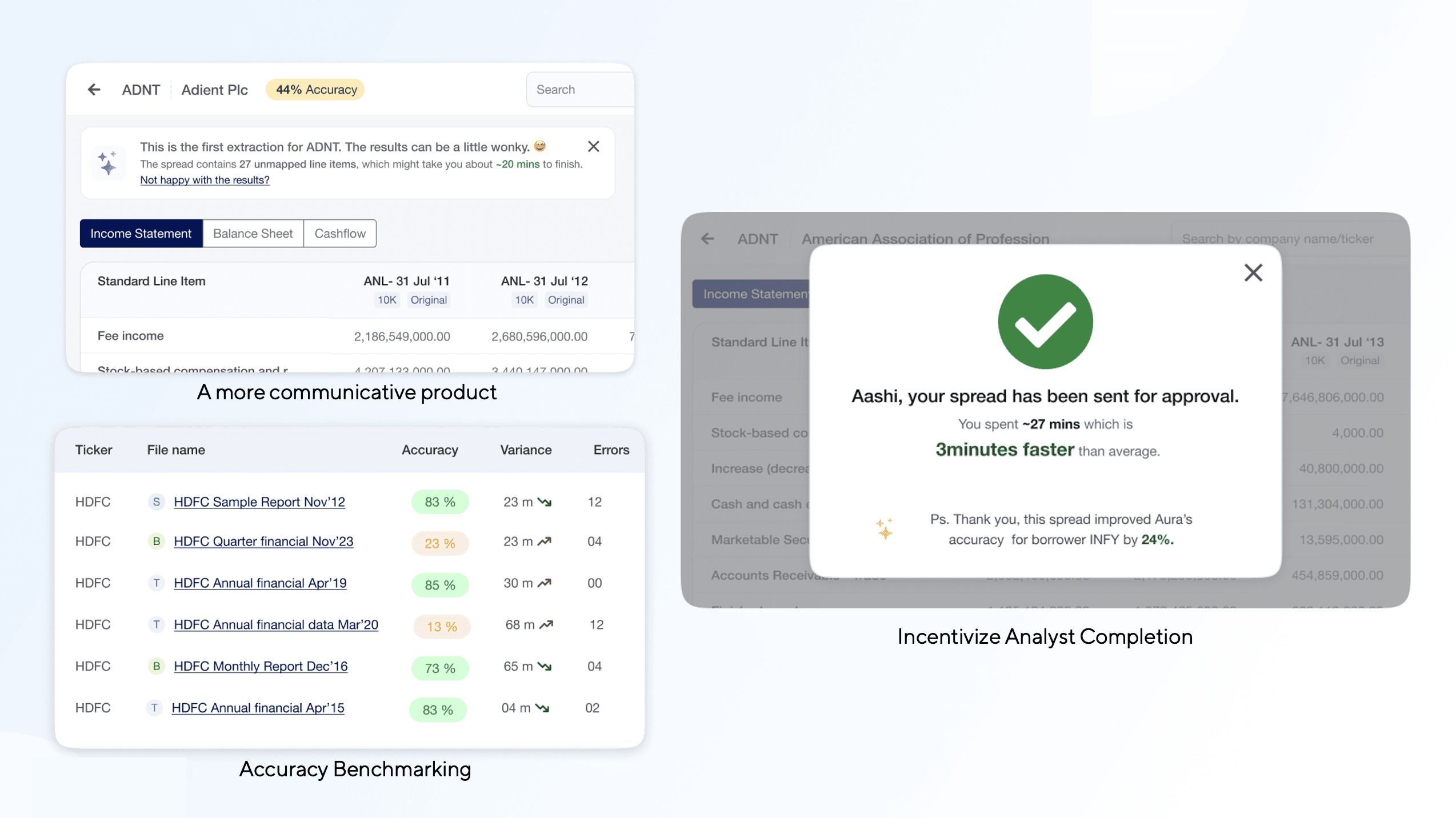

Dashboard revamp: We made sure the dashboard shows what the entire team is doing, to make it the starting point of any spreading activity and to show the group where the work stands right at ground zero. This is something Excel doesn't offer.

Input page evolution: We made the input page instructional first; it begins from where you left off, to save time right at the start.

Schedule identifier evolution: Earlier, the product philosophy was "if you don't tell me how, we'll give you results that are wrong and then you'll have to fix them". This time around, SMEs are mostly reviewing and approving what the product gives them.

Summary up front in spreading: Surfacing calculated figures up front confirms that values are correct and allows a faster quality-assurance flow.

Optimizing the mapping action: From 3 clicks + 2 typing actions to 1 click + 1 typing action, ensuring faster mapping discovery.

Measuring and optimizing time to spread / Outset Disclosure: Telling users how long the process takes on average.

Breaking feedback for which team to act on (Alexandria, Tech-team, UX team, QA team)

Memorized clicking (Overlapping multiple forward actions and typing actions)

Gathering keyboard and mouse interaction optimizations.

Cycling multiple loading copies - Design System Contribution

Emailing them when it's done - Tying product activities to email notifications to bring users back when a process is done

Showing how well they did since the last time + Contribution to the taxonomy

Results may be inaccurate [Poor PDF]: Sending a nudge that they’re not happy with the speed - 10 SMEs have reported this, and work has already commenced.

Borrower level Accuracy on view financials: Getting one borrower right at a time
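The "summary up front" item in the list above can be sketched as a small consistency check run on extracted totals before an analyst reviews them. This is a minimal illustration, not Fura's actual implementation: the `SpreadSummary` type, its field names, and the tolerance value are assumptions. The only rule it relies on is the accounting identity that total assets equal total liabilities plus equity.

```python
from dataclasses import dataclass


@dataclass
class SpreadSummary:
    """Hypothetical container for a few extracted balance-sheet totals."""
    total_assets: float
    total_liabilities: float
    total_equity: float


def balance_gap(summary: SpreadSummary) -> float:
    """Return assets minus (liabilities + equity); zero means the sheet balances."""
    return summary.total_assets - (summary.total_liabilities + summary.total_equity)


def needs_review(summary: SpreadSummary, tolerance: float = 0.5) -> bool:
    """Flag the spread when the gap exceeds a rounding tolerance (assumed value)."""
    return abs(balance_gap(summary)) > tolerance


if __name__ == "__main__":
    # Illustrative figures only, not taken from any real spread.
    balanced = SpreadSummary(total_assets=1200.0, total_liabilities=700.0, total_equity=500.0)
    mismatched = SpreadSummary(total_assets=1200.0, total_liabilities=700.0, total_equity=490.0)
    print(needs_review(balanced))
    print(needs_review(mismatched))
```

Showing a gap like this next to the totals lets the analyst spot a mismatch at the start of review instead of after the whole spread has been checked.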

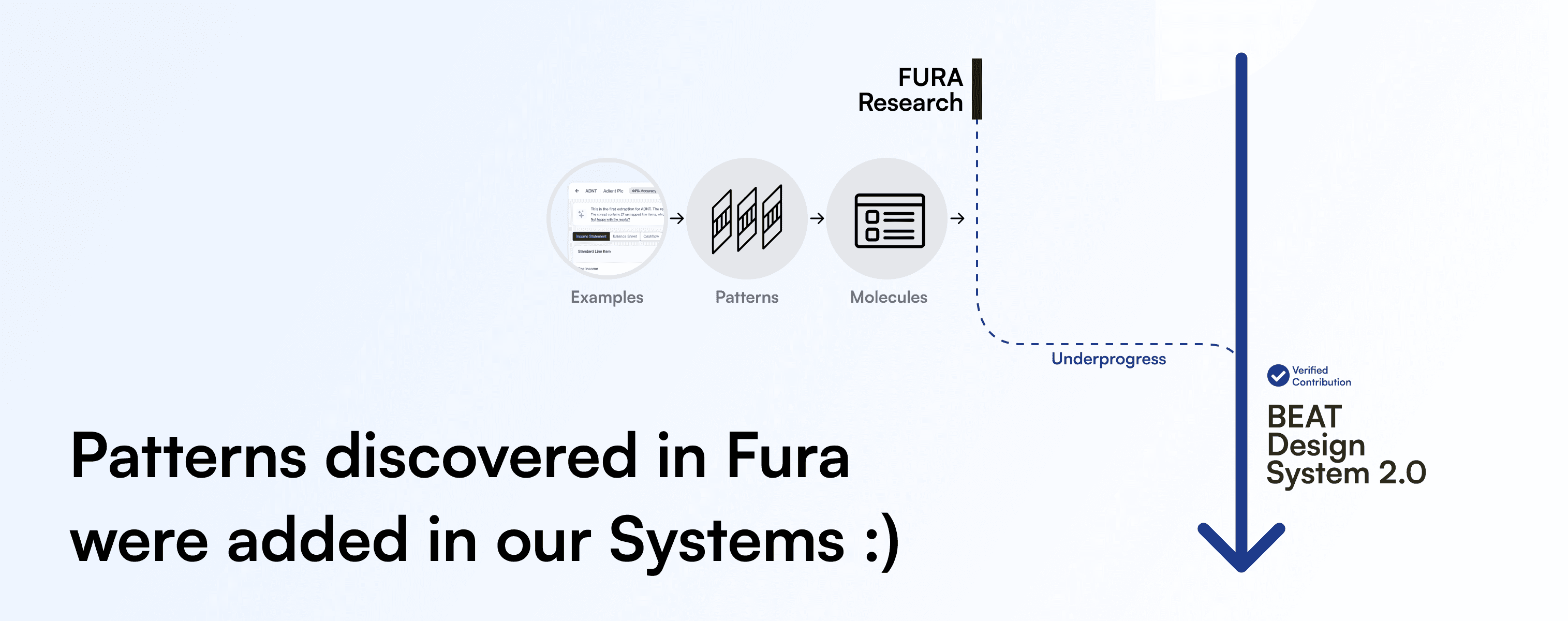

Design System Contribution: Transparency Driven Improvement

The research and insights derived from older products with stagnant usage pointed to a good way of refreshing them: introducing new changes while openly communicating the tool's current level of proficiency. These aspects were found helpful as an incentive for users, who at least contribute towards something tangible (as tangible as sharing a screenshot of it in their annual reviews). This also helps quantify analysts' time on the app.

The qualities of this research were appreciated, and patterns are currently being designed for the entire BEAT design system. The toolkit will help boost retention and track usage more openly, bringing business and product into closer sync.

Iterations: Table Selector - List of Tables & Notes

In the journey of a redesign, there are hundreds of incumbent perspectives, and the goal is to consolidate most of them into a trade-off the team collectively agrees on. The following is one such micro-journey, where we explored the tree structure of notes/schedules in a spread to make the user aware of how important this function is. Some parts of the journey are shown here:

Table selection made simpler - Before & After

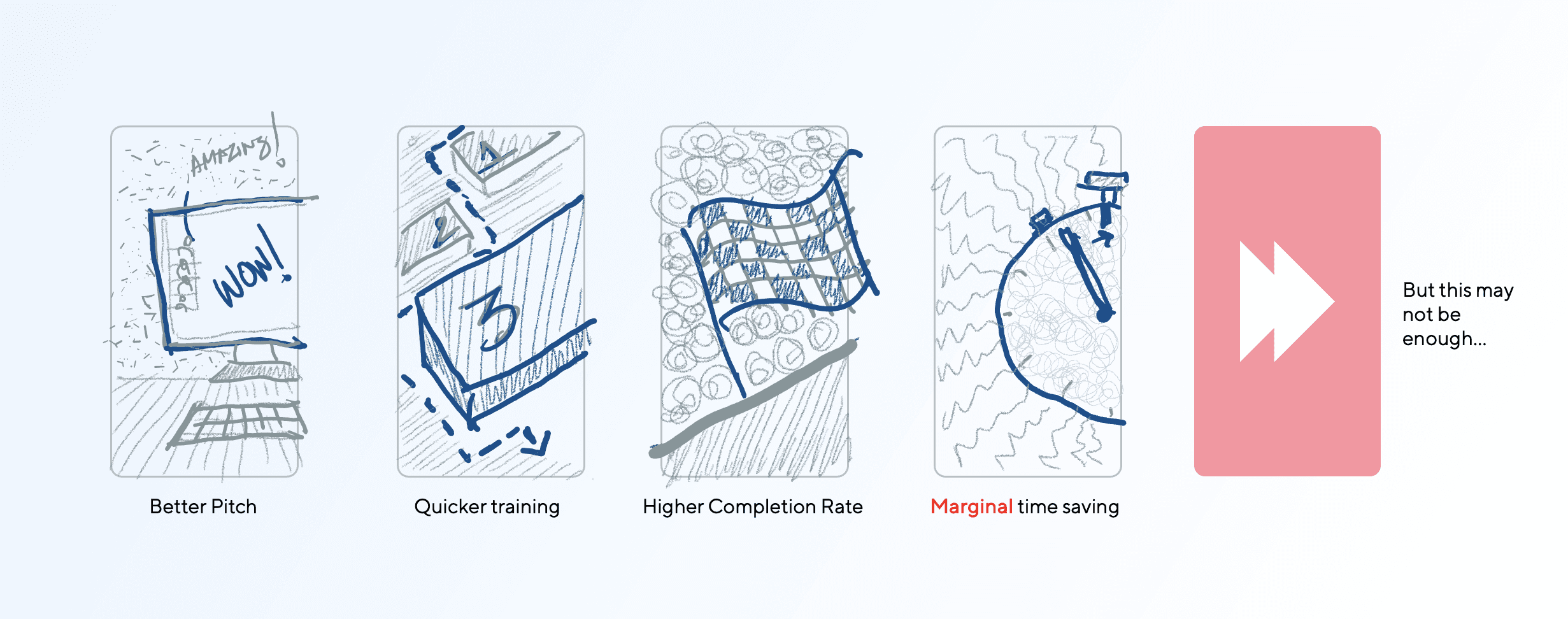

Measuring success: Average spreading time for first spread down by 23% and task completion score now up by 21%*.

*Pitching the redesign to 4 newly confirmed client accounts yielded a measurable impact on the sales side of the managed service, instilling more confidence in clients. The tool, tested with 19 new users, yielded a faster time to spread, with average time per line item down by 13 seconds and a 2x task completion rate right from journey zero, without training.

On the unmeasured side, the tool's self-learnable labels, such as coach marks, allow for quicker demos and training. The completion rate may hence improve drastically because of the automations and the tool's memory of previously run spreads. However, the time savings may not be as large, and here's why.
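For readers who want to reproduce this kind of before/after comparison, a relative change can be computed as sketched below. The helper names and the sample timings are hypothetical; the underlying measurements from the pilot are not reproduced here.

```python
def percent_change(before: float, after: float) -> float:
    """Relative change from `before` to `after`, in percent (negative means a drop)."""
    if before == 0:
        raise ValueError("baseline must be non-zero")
    return (after - before) * 100.0 / before


def completion_rate(completed: int, attempted: int) -> float:
    """Share of attempted tasks that were completed, between 0 and 1."""
    if attempted <= 0:
        raise ValueError("attempted must be positive")
    return completed / attempted


if __name__ == "__main__":
    # Made-up sample values purely for illustration, not pilot data.
    minutes_before, minutes_after = 100.0, 77.0
    print(f"time change: {percent_change(minutes_before, minutes_after):.1f}%")
    print(f"completion:  {completion_rate(8, 10):.0%}")
```

Reporting both the baseline and the changed value alongside the percentage makes figures like these easier to audit later.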

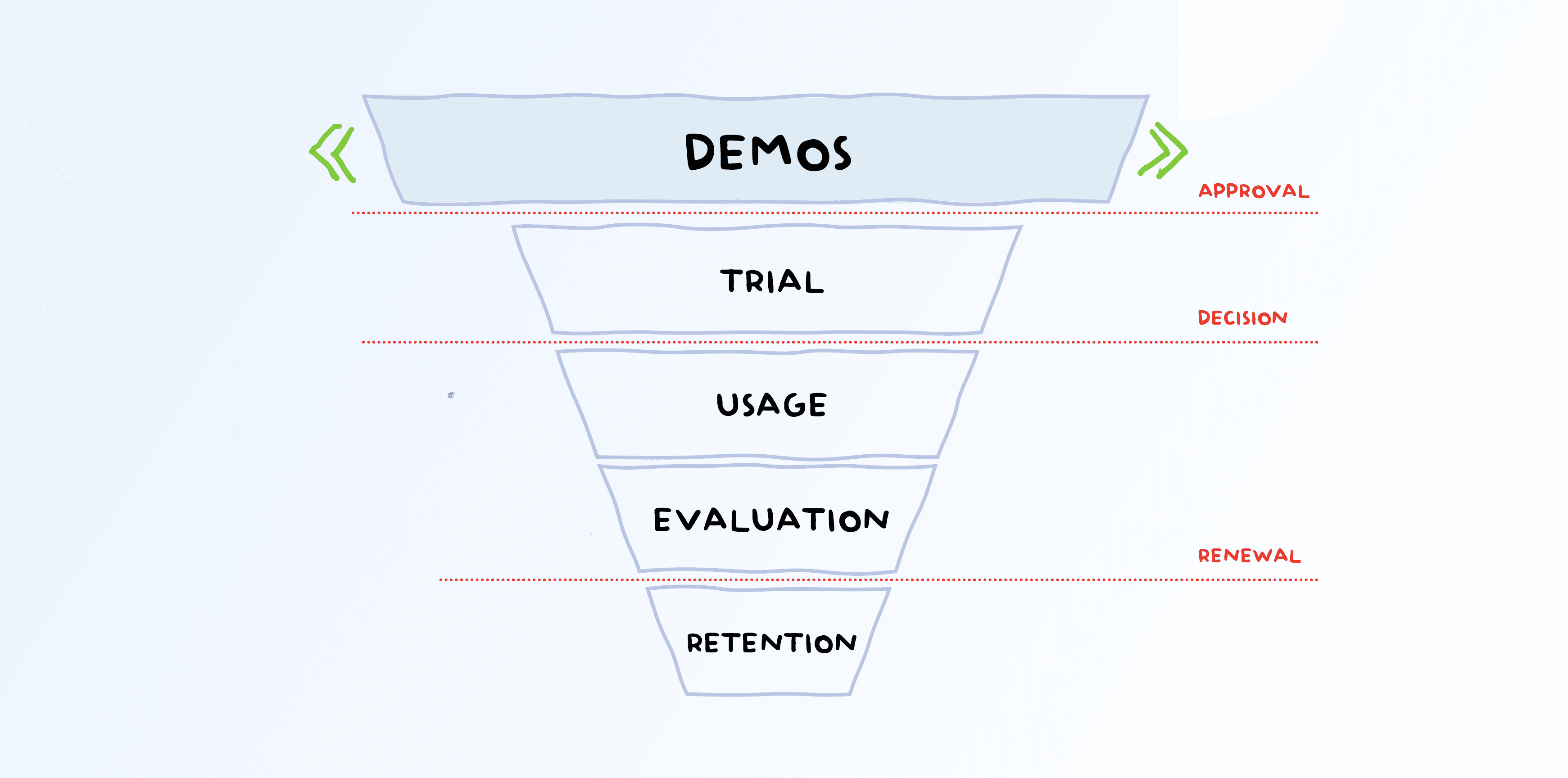

The accuracy-against-UX graph clearly establishes that accuracy is the defining virtue of a product like this. However, in the long-term journey of a SaaS sales funnel, this redesign will 100% expand the demos we get, just through the product's experience and feel. The pilot validates this.

The later stages of the funnel, namely Trial > Usage > Evaluation > Retention, are a larger battle that all aspects have to endure, including performance, data quality, application speed, and user-friendliness.